In today's rapidly evolving technological landscape, artificial intelligence (AI) stands at the forefront, promising to revolutionize countless aspects of our lives. But a pivotal question arises: is AI propelling humanity towards new heights or leading us down a perilous path?

In a previous article, we explained the inflexion point reached by AI, as large language models (LLMs) began to show their potential to become a major game-changer for the world as we know it. Generative AI, whose best-known and among the most sophisticated expressions is ChatGPT, creates original content by learning from existing data, revolutionizing industries and transforming the way companies operate.

This article delves into the potential benefits and risks associated with AI, focusing on what generative AI can do for businesses and on the challenges that accompany technological and human progress.

Generative AI Walks the Talk

And speaking of progress, it’s amazing (if not mind-boggling) to see how far the recently launched GPT-4 has come. Only a few months after the astonishment caused by ChatGPT's launch in November last year, GPT-4 is on its way to reshaping what we thought we knew about AI. Unlike ChatGPT, which accepts only text, GPT-4 accepts prompts composed of both images and text and returns textual responses. Additionally, GPT-4 outperforms GPT-3.5 (the model on which ChatGPT is built) on most academic and professional exams humans take. Notably, GPT-4 scores in the 90th percentile on the Uniform Bar Exam, whereas GPT-3.5 scores in the 10th percentile. It has clearly become more capable, waking up the whole world to the possibilities it creates. And although generative AI promises to make 2023 one of the most exciting years yet for AI, business leaders should proceed with eyes wide open because it presents many ethical and practical challenges.

Where Does Generative AI Have a Role to Play?

Embedded into the enterprise digital core, generative AI and LLMs will optimize tasks, augment human capabilities, and open new avenues for growth. By enabling the automation of many tasks that humans previously did, generative AI can increase efficiency and productivity, and reduce costs, helping businesses gain a significant competitive advantage.

Although these models are still in the early days of scaling, we are already starting to see the first batch of applications across functions, such as:

Marketing and Sales

Generative AI models can be used to create marketing materials, social media posts, blogs, and more, allowing businesses to streamline their content creation process and maintain a consistent brand voice. Moreover, text-to-image programs such as DALL-E, Midjourney, or Stable Diffusion are changing how art, animation, gaming, movies, and architecture, among others, are rendered. Art directors in the advertising world are already using them to create product and brand images. For example, Nestle used an AI-enhanced version of a Vermeer painting to help sell one of its yoghurt brands.

IT & Engineering

Generative AI can also write computer code. For example, GitHub Copilot, from Microsoft-owned GitHub and based on OpenAI’s Codex model, suggests code and helps developers by autocompleting their programming tasks. The system has been reported to autocomplete up to 40% of developers’ code, considerably augmenting their productivity.

Operations

Generative AI can be used to produce task lists for the efficient execution of a given activity and to automate repetitive tasks, such as data entry, report generation, or email responses, freeing up employees to focus on more strategic and creative work.

Risk and Legal

One emerging application of generative AI is the management of text, image, or video-based knowledge within an organization. Creating structured knowledge bases is a labour-intensive process, which has made large-scale knowledge management both costly and difficult for many large companies. Generative AI can help by answering complex questions drawn from vast amounts of legal documentation and by drafting and reviewing annual reports.

For example, Morgan Stanley has fine-tuned GPT-4 on its wealth management content and is now training it to answer financial advisors' questions, so that advisors can search the firm's existing knowledge and create tailored content for clients.

Research & Development

AI-driven models can help businesses in industries like pharmaceuticals and materials science explore vast solution spaces and identify potential innovations, accelerating the pace of discovery and development. For example, generative AI can be used to accelerate drug discovery through a better understanding of diseases and the discovery of chemical structures; or to create optimized designs for vehicle components, such as lightweight structures or aerodynamic shapes, leading to improved fuel efficiency, performance, and safety; or even to optimize the design of renewable energy systems, such as solar panels or wind turbines, maximizing their efficiency and output while minimizing costs. Moreover, AI-driven design tools can help researchers develop new robotic components or entire systems, optimizing their structures and functionalities for specific tasks or environments – we’ve come to a point where robots are creating robots themselves!

Conversational AI

LLMs are increasingly being used at the core of conversational AI. They can improve conversational understanding and context awareness, which can lead to better business problem-solving. Here are some ways in which generative AI is leveraged in conversational AI:

- Natural Language Understanding (NLU) and Natural Language Generation (NLG): Generative AI models help in understanding and extracting meaning from user inputs, allowing conversational AI systems to accurately interpret user intent and context. This enables the system to provide relevant and personalized responses and take appropriate actions based on the user's needs.

- Dialogue Management: Generative AI can be used to build models that manage the flow of conversation, maintain context, and ensure smooth transitions between topics. This helps in creating a coherent and engaging conversational experience for users.

- Continuous learning and adaptation: Generative AI models can learn from user interactions, refining their understanding of language and context over time. This continuous learning allows conversational AI systems to become more effective and accurate in their responses, adapting to the evolving needs of users.

- Multi-format interactions: AI models can be extended to generate content in various formats, such as text, images, or audio, enabling conversational AI systems to interact with users across multiple channels and modalities, improving the overall user experience.

According to Accenture, companies will use these models to reinvent the way work is done. Every role in every enterprise has the potential to be reinvented as humans working with AI co-pilots becomes the norm, dramatically amplifying what people can achieve. In any given job, some tasks will be automated, some will be assisted, and some will be unaffected by technology. There will also be a large number of new tasks for humans to perform, such as ensuring the accurate and responsible use of new AI-powered systems.

Why Using Generative AI Isn’t Without Risks

Despite the current market downturn and layoffs in the technology sector, generative AI companies continue to generate significant investor interest. That is not surprising when companies such as Sequoia claim that the field of generative AI can generate trillions of dollars in economic value. And although the awe-inspiring results of generative AI might make it seem like a ready-set-go technology, that’s not completely the case. AI researchers are still working out the kinks, and plenty of practical and ethical issues remain open. Here are just a few:

- Like humans, generative AI can be wrong. ChatGPT, for example, sometimes “hallucinates,” meaning it confidently generates entirely inaccurate information in response to a user question and has no built-in mechanism to signal this to the user or challenge the result.

- Ethical concerns: Generative AI can produce content that may be biased, offensive, or inappropriate, potentially causing harm or damaging a company's reputation. Ensuring that AI-generated content adheres to ethical standards and guidelines is crucial for businesses.

- Data privacy: Training generative AI models typically requires large amounts of data, which may include sensitive or personal information. Ensuring data privacy and complying with relevant regulations, such as GDPR, is essential for businesses using AI. The most recent example is Italy’s ban on ChatGPT due to concerns over the illegal collection of personal data and the lack of guardrails to prevent minors from using the chatbot.

- Intellectual property questions are up for debate. When a generative AI model brings forward a new product design or idea based on a user prompt, who can lay claim to it? What happens when it plagiarizes a source based on its training data?

- Misuse or manipulation: Generative AI can be used to create deep fakes or other forms of misinformation, potentially harming businesses by spreading false information or damaging their reputation.

- Security vulnerabilities: As with any technology, AI systems can be vulnerable to cyberattacks or data breaches, posing risks to both the business and its customers.

- Regulatory compliance: As AI becomes more prevalent in business applications, new regulations may emerge, and businesses will need to ensure that their systems comply with these rules.

Limitations of Generative AI in the Enterprise World

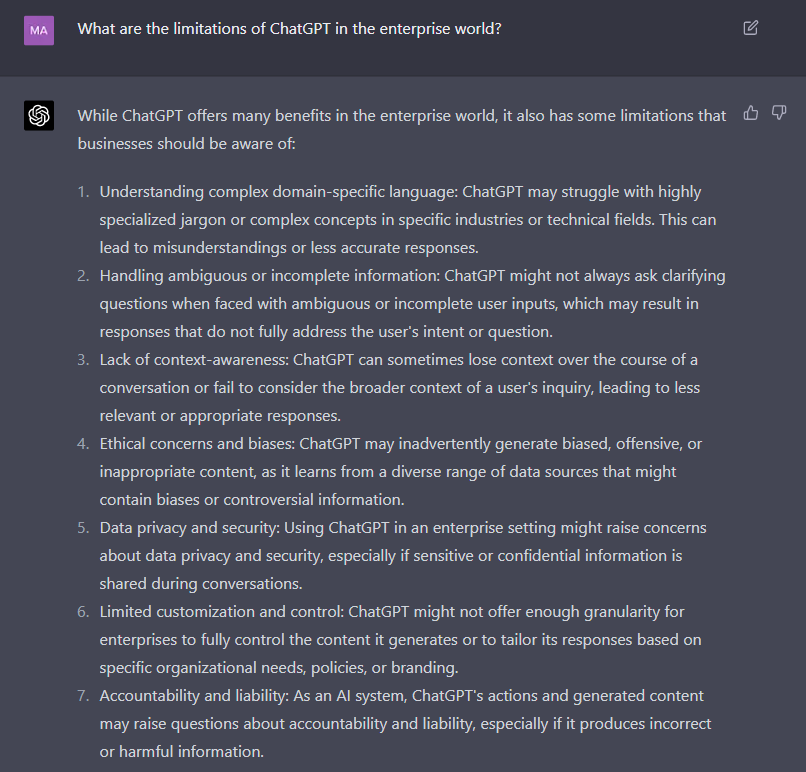

In the enterprise world, things can get even more complicated: LLMs need to be trained on specific enterprise data, operate under clear data and security rules, and produce answers that don't come from a “black box”. Enthusiasm is growing: nearly 6 in 10 organizations plan to use ChatGPT for learning purposes, and over half are planning pilot cases in 2023. This puts more pressure on developing technology that adapts to specific business needs and overcomes limitations such as:

Data Privacy and Security Compliance

ChatGPT operates with untraceable data sets that lack transparency and are not fully reliable. At the same time, ChatGPT itself states that the information exchanged in a conversation is not confidential, which is why it recommends not sharing anything sensitive in the chat. In the enterprise world, it’s mandatory to comply with data protection laws, such as the GDPR, and to implement appropriate security measures that protect sensitive and confidential data (e.g., financial or medical information) against cyberattacks and fraud. In the long run, generative AI will grow to support enterprise governance and information security: protecting against fraud, improving regulatory compliance, and proactively identifying risk by drawing cross-domain connections and inferences both within and outside the organization. In the short term, however, organizations can expect criminals to capitalize on generative AI’s capabilities to generate malicious code or write the perfect phishing email.

Need for Fine-Tuning

If you need to use ChatGPT for very specific use cases, you may need to fine-tune the model to get what you need. Fine-tuning involves training the model on a specific set of data to optimize its performance for a particular task or objective and can be time-consuming and resource-intensive.
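To make the idea of fine-tuning more concrete, here is a deliberately tiny sketch. It is not how ChatGPT or any LLM is actually fine-tuned; it only illustrates the principle of taking a model trained on general data and continuing its training on a small, task-specific data set. The one-parameter model, the data sets, and the function names `train` and `mse` are all hypothetical and chosen purely for illustration.

```python
def train(weight, data, lr=0.01, epochs=200):
    """Run gradient descent on mean squared error for the model y = weight * x."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def mse(weight, data):
    """Mean squared error of the model y = weight * x on the given data."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


# Hypothetical "general" data (roughly y = 2x) and "domain" data (roughly y = 2.5x).
general_data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.0)]
domain_data = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.4)]

# "Pre-training": learn from general data, starting from scratch.
pretrained = train(0.0, general_data)

# "Fine-tuning": start from the pre-trained weight and continue on domain data.
fine_tuned = train(pretrained, domain_data, epochs=50)

print(f"error on domain data before fine-tuning: {mse(pretrained, domain_data):.4f}")
print(f"error on domain data after fine-tuning:  {mse(fine_tuned, domain_data):.4f}")
```

Even in this toy setting, the second training run needs its own curated data and its own compute budget, which hints at why fine-tuning a real LLM can be time-consuming and resource-intensive.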

Computational Costs and Power

ChatGPT is a highly complex and sophisticated AI language model that requires substantial computational resources to operate efficiently — which means running the model can be expensive and may require access to specialized hardware and software systems. Organizations should carefully consider their computational resources and capabilities before using ChatGPT. Cloud infrastructure will be essential for deploying generative AI while managing costs and carbon emissions. Data centres will need retrofitting. New chipset architectures, hardware innovations, and efficient algorithms will also play a critical role.

Black-Box Environment

Untraceable data sets used for model training usually lead to inconsistency and to logical and factual inaccuracies. The result is an incomplete understanding of context, a lack of transparency and manageability, bias, and missing safety and compliance measures. The opposite can be achieved with transparent algorithms and processes and with the auditing mechanisms that most existing conversational AI platforms already have.

Leaving the User to Deal With Uncertainty

The inherent structure of LLMs such as the GPT models means they can generate inaccurate information in an unpredictable manner. As a result, the output can only be used in scenarios where the results are evaluated subjectively or where human intervention can rectify any discrepancies. In a business context, responses must be consistent and predictable to maintain a high level of reliability and usability.
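One common way to keep a human in the loop can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any particular product: the `DraftAnswer` type, the `check_score` field (a hypothetical 0 to 1 score produced by some separate validation step, such as a comparison against approved content), and the 0.8 threshold are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class DraftAnswer:
    question: str
    text: str
    check_score: float  # hypothetical 0..1 score from a separate validation step


def route_answer(draft: DraftAnswer, threshold: float = 0.8) -> str:
    """Return 'send' for drafts that pass the check, otherwise 'human_review'."""
    if draft.check_score >= threshold:
        return "send"
    return "human_review"


# Hypothetical drafts produced by a generative model.
drafts = [
    DraftAnswer("What is my card limit?", "Your limit is listed in the app.", 0.93),
    DraftAnswer("Can I cancel my policy?", "Yes, at any time and free of charge.", 0.41),
]

decisions = [route_answer(d) for d in drafts]
for draft, decision in zip(drafts, decisions):
    print(f"{decision}: {draft.question}")
```

The design choice here is that the model never has the last word on uncertain answers: anything below the threshold is held back for a person to correct, which trades some speed for the consistency a business context requires.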

Yet one of the biggest concerns lately is how to regulate AI better in a difficult-to-regulate environment where the voices of AI's proponents and detractors are louder than ever. Accenture suggests that the rapid adoption of generative AI brings fresh urgency to the need for every organization to have a robust responsible AI compliance regime in place. This includes controls for assessing the potential risk of generative AI use cases at the design stage, and a means to embed responsible AI approaches throughout the business. Accenture's 2022 survey of 850 senior executives globally revealed widespread recognition of the importance of responsible AI and AI regulation. Still, only 6% of organizations felt they had a fully robust responsible AI foundation in place.

Furthermore, here's what ChatGPT itself says about its usage limitations in the enterprise world:

Conclusion

“The real problem of humanity is the following: We have Paleolithic emotions, medieval institutions and godlike technology.” (Dr. E.O. WILSON, sociobiologist)

This means that with great technology comes great responsibility. It doesn’t mean we must stop using that technology: that is impossible, as is trying to pause or slow it down. To mitigate AI’s possible threats, it is essential to develop and adopt responsible AI practices, invest in research to address AI-related challenges, and establish policies and regulations that guide the development and deployment of AI technologies in a manner that prioritizes human welfare and ethical considerations.

Despite the challenges, it is clear that generative AI is a rapidly growing field with a lot of potential for innovation and value creation. Businesses are right to be optimistic about the potential of generative AI to radically change how work gets done and what services and products they can create. Still, they also need to be realistic about the challenges that come with profoundly rethinking how the organization functions and can thrive.